Two intrinsic problems with the current implementations of AI are that they are insanely resource-intensive and that they require huge training sets. Neither of those is directly a problem of ownership or control, though both favor larger players with more money.

A third intrinsic problem is that current models have been proven never to approach human language capability, even with infinite training data, according to papers from OpenAI in 2020 and DeepMind in 2022. There is also a Stanford paper proposing that AI simply has no emergent behavior, only convergent behavior.

So yeah. Lots of problems.

While I completely agree with you, that is the one thing that could change if just one of the many groups working on exactly that problem gets one thing right.

It’s what happens after that that’s probably really scary. Perhaps we all go into some utopian AI-driven future, but I highly doubt that’s even possible.

Same as always. There is no technology capitalism can’t corrupt.

For some reason the megacorps have got LLMs on the brain, and they’re the worst “AI” I’ve seen. There are other types of AI that are actually impressive, but the “writes a thing that looks like it might be the answer” machine is way less useful than they think it is.

Most generative AI tools for chat, pictures, and clips are magical and amazing for about 4 to 8 hours of fiddling; then they lose all entertainment value.

As for practical use, the things can’t do math, so they’re useless at work. I write better emails on my own, so I can’t imagine being so lazy and socially inept that I need help writing an email asking for tech support or outlining an audit report. Sometimes the web summaries save me from clicking a result, but I usually click anyway because the things are so prone to very convincing hallucinations. So yeah, utterly useless in their current state.

When I say this, I usually get an angsty reply from some techbro-AI-cultist-singularity-head who starts whinging about how it’s reshaped their entire life, but in some deep niche way that is completely irrelevant to the average working adult.

I have also talked to way too many delusional maniacs who are literally planning for the day an Artificial Super Intelligence is created and the whole world becomes like Star Trek and they personally will become wealthy and have all their needs met. They think this is going to happen within the next 5 years.

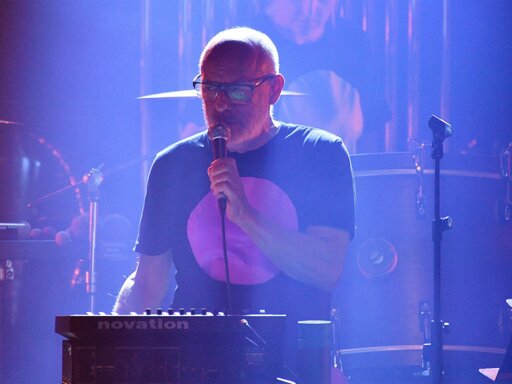

Brian Eno is cooler than most of you can ever hope to be.

Dunno. I’ve tried the generative music part (not the LLM kind), and I think if I spent a few more years of weekly migraines on it, I’d get better.

You mean in the same way that learning an instrument takes time and dedication?

The AI business is owned by a tiny group of technobros who have no concern for what they have to do to get the results they want (“fuck the copyright, especially fuck the natural resources”), who want to be personally seen as the saviours of humanity (despite not being the ones who invented and implemented the actual tech), and who, like all big-wig biz boys, want all the money.

I don’t have problems with AI tech in principle, but I hate the current business direction and what the AI business encourages people to do and use the tech for.

Well, I’m on board with “fuck intellectual property.” If OpenAI doesn’t publish the weights, then all their datacenters get visited by the killdozer.

The government likes concentrated ownership because then it has only a few phone calls to make if it wants its bidding done (be it censorship, manipulation, partisan political chicanery, etc.).

And it’s easier to manage and track a dozen bribe checks rather than several thousand.


That’s why we need the weights right now, before they figure out how to do this. It will happen, but at least we can prevent backsliding from what we have now.

And those people want to use AI to extract money and to lay off people in order to make more money.

That’s “guns don’t kill people” logic.

Yeah, the AI absolutely is a problem, for those reasons along with it being wrong a lot of the time and the ridiculous energy consumption.

The real issues are capitalism and the lack of green energy.

If the arts were well funded, if people were given healthcare and UBI, if we had at the very least switched to nuclear like we should’ve decades ago, we wouldn’t be here.

The issue isn’t a piece of software.

AI excels at some specific tasks. The chatbots they push on us are a gimmick right now.

Reminds me of “Biotech Is Godzilla.” The Sepultura version, of course.

I don’t really agree that this is the biggest issue; for me, the biggest issue is power consumption.

That is a big issue, but excessive power consumption isn’t intrinsic to AI. You can run a reasonably good AI on your home computer.

The AI companies don’t seem concerned about the diminishing returns, though, and will happily spend 1000% more power to gain that last 10% of intelligence. In a competitive market, why wouldn’t they, when power is so cheap?

He’s not wrong.

That’s… just not true? Current frontier AI models are actually surprisingly diverse: a dozen companies from America, Europe, and China are releasing competitive models, not to mention the countless fine-tunes created by the community. And you can run many of them entirely on your own hardware, so no one really has control over how they are used. (Not saying that’s necessarily a good thing, just pointing out that Eno is wrong.)

Truer words have never been said.

I’d say the biggest problem with AI is that it’s being treated as a tool to displace workers, but there is no system in place to make sure that the “value” created by AI (I’m not convinced commercial AI has created anything valuable) is redistributed to the workers it has displaced.

Welcome to every technological advancement ever applied to the workforce.

The system in place is “open weights” models. These AI companies don’t have a huge head start on the publicly available software, and if the value is there for a corporation, almost any savvy solo engineer can slap together something similar.